On building my personal LLM benchmark

Over the past week, I’ve experimented upon building a personal LLM benchmark that tests models based on my specific tastes and requirements. I’d definitely encourage anyone to make their own suites, but it’s very involved. I’ll probably write about my experience soon in an actual blog post…

Anyway, upon investigating my test-cases, I learned that most of the things I care about are a matter of taste and style. For example, I prefer matplotlib code to be done a certain way (using fig, ax = plt.subplots(...) vs direct plt) or concise responses over verbose ones. I wonder if there’s a way we can incorporate these personal preferences during finetuning (assuming we have the resources to do so) with zero-to-few human annotation effort.

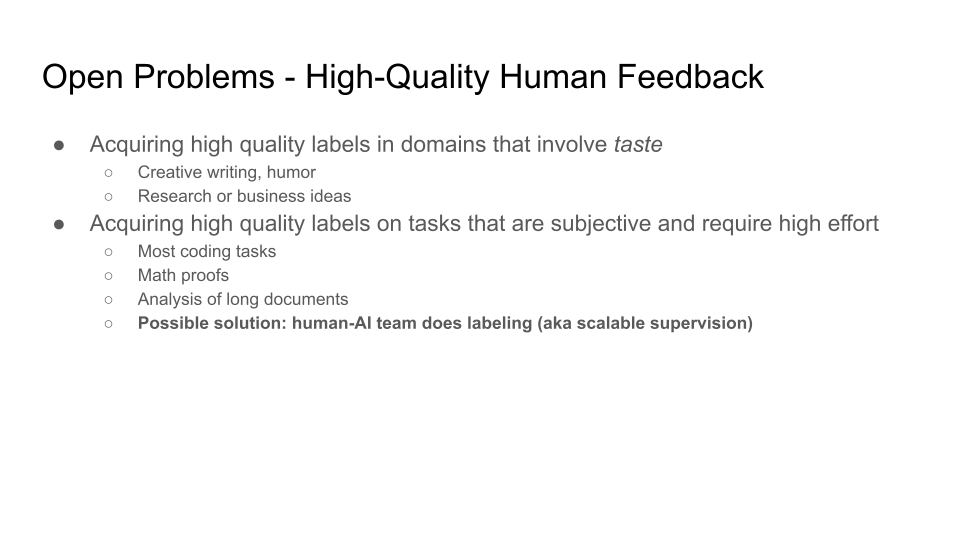

This reminds me of a slide from John Schulman’s talk on open problems in High-Quality Human Feedback (we actually took a stab on this problem in a previous work):

Again, this is something I want to write more about in the future. Still organizing my thoughts on this. And by the way, seems like Qwen/Qwen2.5-14B-Instruct-1M is the best model in my personal benchmark so far when accounting for performance and cost-efficiency.